Stating interest in pledging $8k if we're close to the funding bar!


[CLOSED] Arkose may close soon

Not funded · Grant

$0 raised

We are no longer requesting funding at this time.

Arkose is an AI safety fieldbuilding organisation that helps experienced machine learning professionals, such as professors and research engineers, engage with the field. We focus on those new to AI safety, and have strong evidence that our work helps them take meaningful first steps.

Since December 2023, we’ve held nearly 300 one-on-one calls with mid-career machine learning researchers and engineers. In follow-up surveys, 79% reported that the call accelerated their involvement in AI safety[1]. Nonetheless, we’re at serious risk of shutting down in the coming weeks due to a lack of funding. Several funders have told us that we’re close to meeting their bar, but not quite there, leaving us in a precarious position. Without immediate support, we won’t be able to continue this work.

If you're interested in supporting Arkose and would like to learn more, please reach out here or email victoria@arkose.org.

What evidence is there that Arkose is impactful?

AI safety remains significantly talent-constrained, particularly with regard to researchers who possess both strong machine learning credentials and a deep understanding of existential risk from advanced AI.

Arkose aims to address this gap by identifying talented researchers (e.g., those with publications at NeurIPS, ICML, and ICLR), engaging them through one-on-one calls, and supporting their immediate next steps into the field.

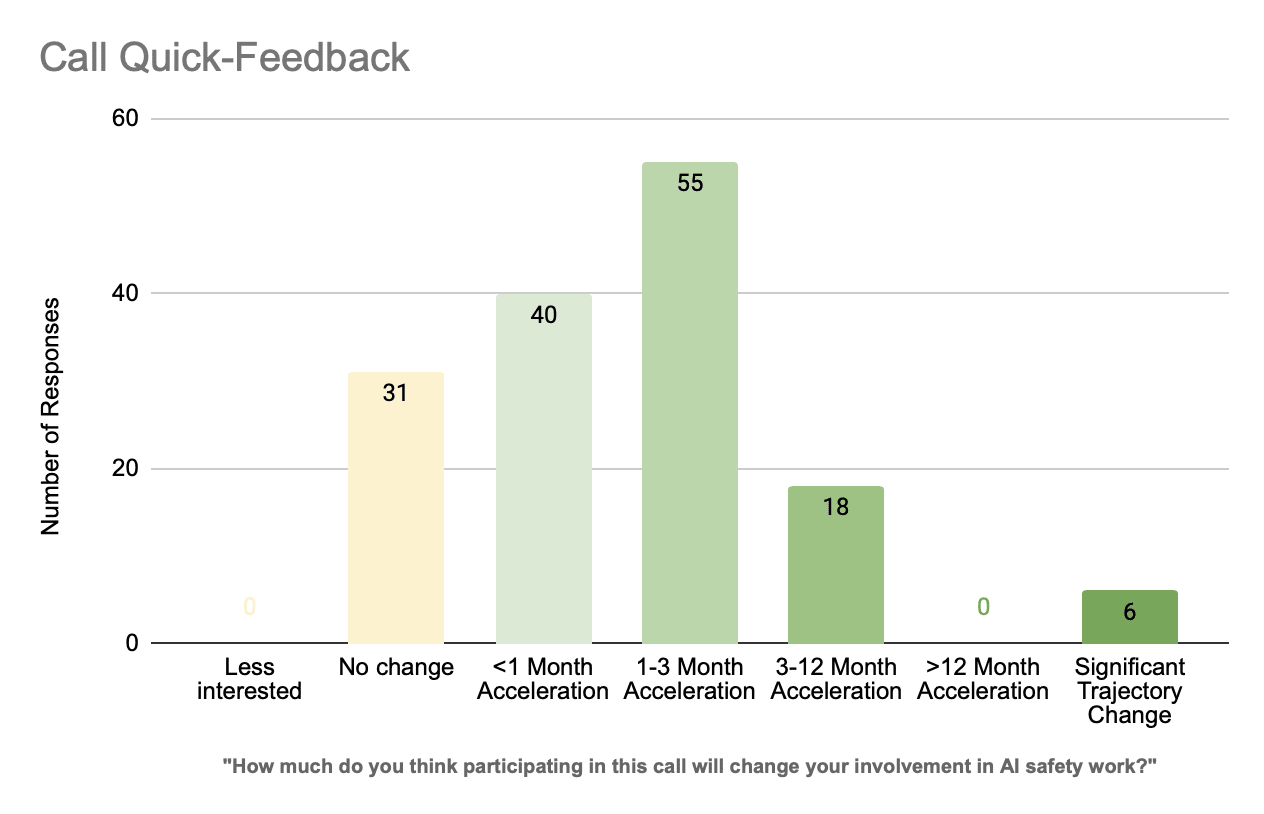

Following each call, we distribute a feedback form to participants. 52% of professionals complete the survey, and of those, 79% report that the call accelerated their involvement in AI safety:

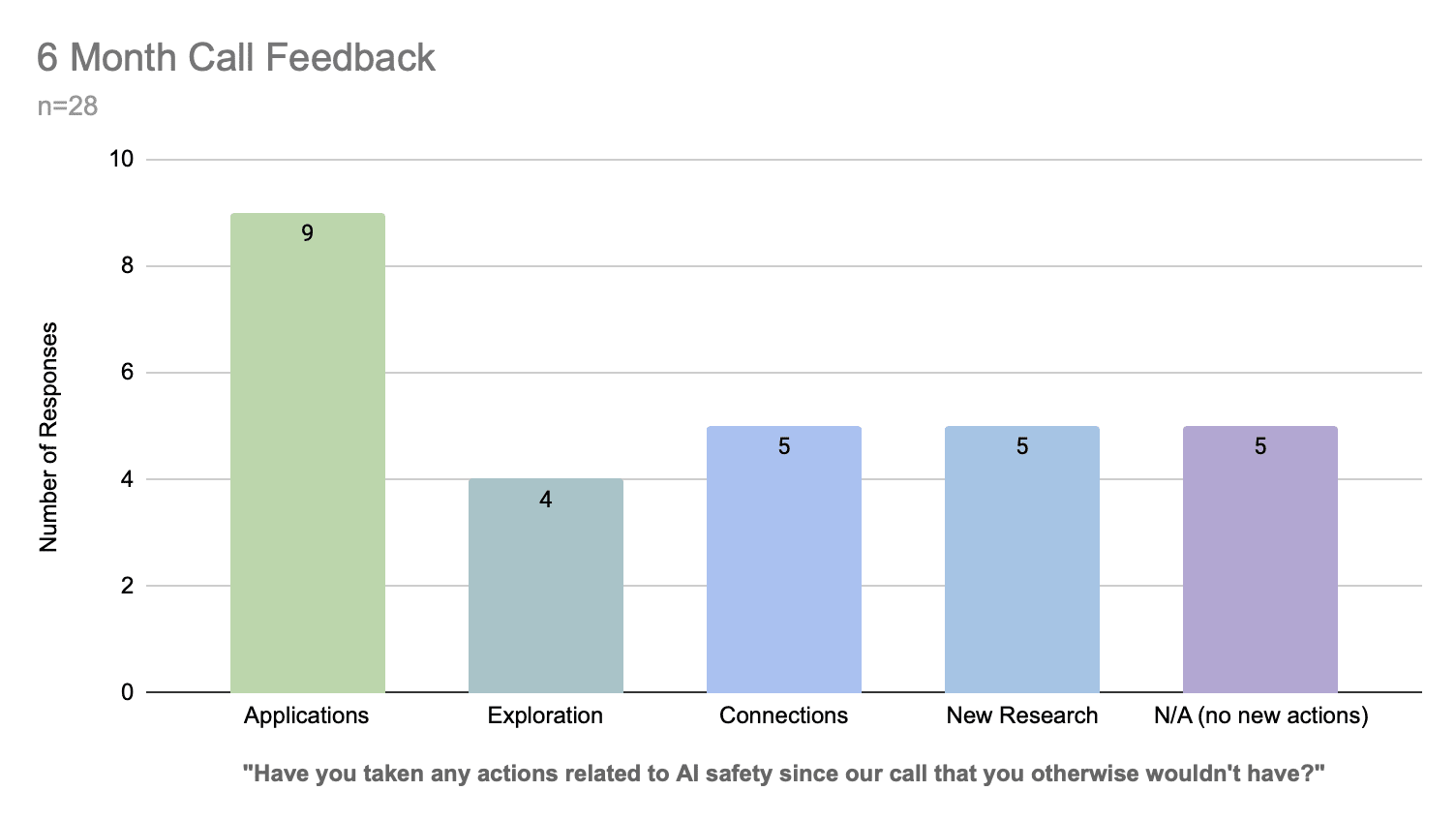

We also send a short follow-up form six months later. The responses show that professionals take a variety of concrete actions following their call with us:

We think that accelerating the involvement of a senior machine learning professional (e.g. a professor) in AI safety by 1-3 months is a great outcome, and we're excited about the opportunity to improve our call quality over the next year and support researchers even better.

Unfortunately, we're not able to share all of our impact analysis publicly. If you're interested in learning more (for instance, if you'd like to see summaries of particularly high or low impact calls), please reach out to victoria@arkose.org.

What would the funding allow you to achieve?

We need a minimum of $200,000 in additional funding, which, combined with our remaining runway, would allow us to operate at our current size for one year. During this period, we expect to run between 300 and 800 more calls with senior researchers while improving our public resources and expanding our support for researchers after the call.

How can I help?

At present, we're only seeking funding at or above our minimum viable level. That said, we're still enthusiastic about small donors, and we may be able to continue if enough of them are willing to contribute. We're using Manifund's system to ensure donations only go through if we reach our minimum bar; otherwise, donors will be refunded.

We believe Arkose is a promising, cost-effective approach to addressing one of AI safety’s most pressing bottlenecks, and we remain excited about this work. With additional funding, we’re in a position to continue reaching high-impact researchers, deepen our post-call support, and refine our approach. Without it, we will be forced to wind down operations in the coming weeks. If this work aligns with your interests, please do get in touch; the next few weeks will be a critical time for us.

[1] Among the 51% of participants who gave feedback immediately after their call.

Carmen Csilla Medina

10 months ago

I also have another question: do you happen to have any data on the demographics of the people you have successfully reached and supported? I would be mainly interested in whether you were able to tap into global talent (that is, talent outside the main AIS hubs such as the UK, US, and Europe), because that relates to my own work at Condor Initiative :)

Arkose

10 months ago

@CarmenCondor Unfortunately, we don't track this information well. I was able to get some data on 56% of our calls (mostly the direct outreach calls). Of these, 90% were with people in the US, UK, or Europe. This means we've had 17 calls with researchers and engineers outside these areas, including in China, Korea, and Singapore. There may be some inaccuracy in these statistics as it's not a key metric for us, but I do expect them to be broadly indicative.

Carmen Csilla Medina

10 months ago

Thanks a lot for the write-up!

Skimming through this post + your website, the following thing was not completely clear to me:

Do you mainly target ML experts who are already interested in pursuing AIS careers (and thus you accelerate their progress) or do you also do outreach to ML experts who have not considered it as a career (either due to lack of knowledge or due to lack of initial interest)?

If it's the latter, how do you go about nudging people towards AIS?

If it's the former, how do you identify ML experts who know about AIS but need support to transition (do you, e.g., advertise yourselves at ML conferences)?

Arkose

10 months ago

@CarmenCondor Hi Carmen, great question!

We reach out directly to researchers who've submitted to top AI conferences, and we also speak with those who are already interested in AI safety (e.g. via referrals or direct applications through our website).

62% of our calls are sourced from direct outreach via email to researchers and engineers who've had work accepted to NeurIPS, ICML, or ICLR. As assessed by us after the call, 46% of the professionals on these calls had no prior knowledge of AI safety, and a further 25% were 'sympathetic' (e.g. had heard vague arguments before, but were largely unfamiliar). On these calls, we focus on an introduction to why we might be concerned about large-scale risks from AI before discussing technical AI safety work relevant to their background, and covering ways they could get involved.

The remainder of our calls come from a variety of sources, such as the MATS program or 80,000 Hours, and the people on them are broadly more familiar with AI safety. We identify those who need support primarily through self-selection, but also through a referral process from these organisations. On these calls, our value-add is an in-depth understanding of the needs of AI safety organisations and recommending tailored, specific next steps to get involved.

Carmen Csilla Medina

10 months ago

@Arkose, Super interesting, thank you.

If it's not a trade secret: what is your pitch to those who are not self-selected or referred? (E.g. a pitch about applying their skills to make a positive impact in the world.)

I don't know this target group very well, but I assume they tend to already have a stable job, so "career coaching" would not necessarily appeal to them. However, I also know that many mid-career professionals experience some kind of existential crisis if they feel that their work is not making a positive impact in the world.

Arkose

10 months ago

@CarmenCondor Unfortunately this varies a lot depending on who I'm speaking with, so it's hard to summarise.

I agree that the "career coaching" frame is not always appropriate, especially for academics. Often, I find it useful to emphasise both the potential for positive impact and the simple legitimacy of the work: for many, it helps to highlight that this is a serious field of research that can be published in top conferences and can get funded. This often involves a more technical discussion of the areas of overlap with their research; I find AI safety is now broad enough that there is usually some overlap. With professors especially, I will often discuss any currently open funds that might be relevant to their work, and encourage them to check our opportunities page when seeking funding in the future.

Carmen Csilla Medina

10 months ago

@Arkose, sounds like a great way to go about it! Though I assume the initial filtering needs to be at least moderately strong, since such individually tailored outreach can be time-consuming (but a good investment).

Arkose

10 months ago

@CarmenCondor Just to clarify -- our initial outreach email is only very coarsely personalised (e.g. based on whether they are in academia vs industry). I'm describing the pitch I would give somebody on a 1:1 call.